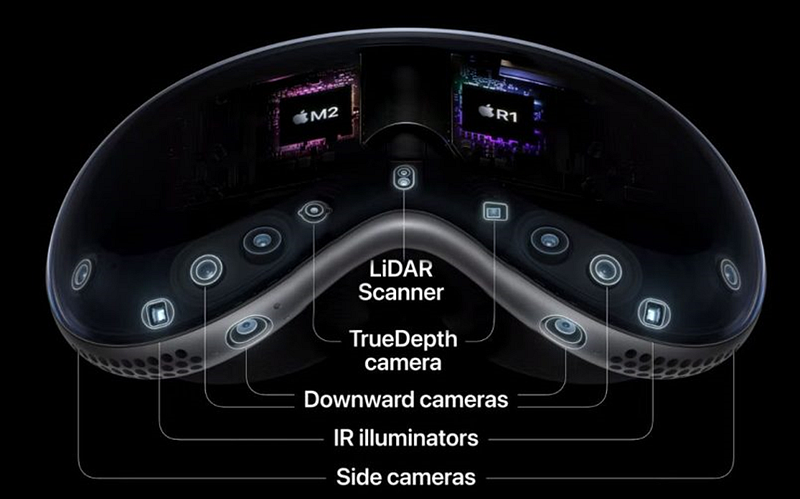

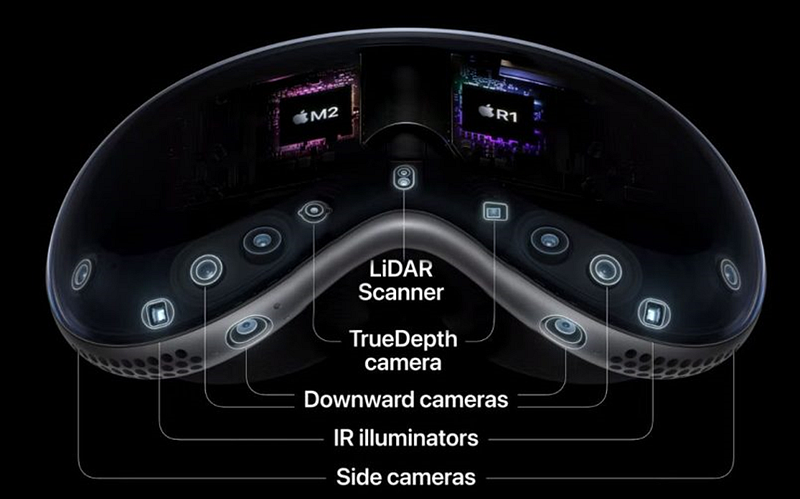

The Apple Vision Pro is equipped with multiple sensors that enhance its depth perception and blend virtual and real content seamlessly in three-dimensional space. Its visual sensors include RGB cameras, infrared cameras, a dToF LiDAR, a structured light camera, and fisheye infrared cameras.

The exterior of the Apple Vision Pro features:

- 2 forward-facing RGB cameras for forward shooting and VST (Video See Through).

- 4 fisheye infrared cameras facing side and forward for 6DOF (Six Degrees of Freedom) tracking.

- 2 downward-facing infrared cameras for tracking the torso and gestures made below the headset.

- 2 infrared lasers that emit infrared light to illuminate the torso, legs, knees, hands, and the surrounding area within the control range, assisting the infrared and fisheye infrared cameras in capturing active elements in those areas.

- 1 dToF (direct time-of-flight) LiDAR, similar to the one used with the rear camera of the iPhone Pro, supporting 3D shooting, spatial reconstruction, depth perception, and positioning.

- 1 structured light camera, also known as a TrueDepth camera, similar to the front-facing structured light Face ID on iPhones. It supports face scanning in FaceTime applications and precise gesture tracking in the forward area.

Inside the Apple Vision Pro, there are 4 infrared cameras and a ring of LEDs. It is speculated that these form a structured-light setup whose light-field information is used for eye tracking and eye-expression analysis.

The variety of sensor hardware in the Apple Vision Pro distinguishes it from the headsets of other VR manufacturers. Apple incorporates LiDAR and structured light sensors, eliminates the need for handheld controllers, and relies on gesture tracking for interaction. This combination, coupled with Apple’s polished user interface, has led to outstanding performance compared to similar devices. Initial users of the Apple Vision Pro have highly praised most of its functions.

Apple has used Sony’s dToF LiDAR technology in products such as the iPad Pro, iPhone 12 Pro, iPhone 13 Pro, and iPhone 14 Pro, and now in the upcoming Apple Vision Pro. The dToF LiDAR was developed by Sony and is used exclusively by Apple. Apple’s interest in 3D sensing can be traced back to its 2013 acquisition of PrimeSense, an Israeli 3D sensing company. Over the years, Apple has accumulated technology and expertise in the field, leading to the release of structured-light Face ID in 2017 and the dToF LiDAR solution in 2020. The dToF LiDAR is used in combination with the rear camera on the iPad Pro and iPhone 12 Pro.

The LiDAR module in the Apple Vision Pro is similar in shape to the dToF LiDAR module used in earlier products. It is speculated that the LiDAR’s core receiver chip is the IMX 590, a custom chip co-developed by Apple and Sony. Future revisions may change this design, however: Sony aims to replace the light-emitting VCSEL chip with one it develops itself, possibly integrating the VCSEL and its driver in a VCSEL-on-driver form to improve performance and power consumption.

The dToF LiDAR enables various 3D applications and special effects, and both third-party developers and Apple are working to leverage its capabilities. Apple has integrated the LiDAR into its iPhone imaging system, adding a “light distribution map” to the ISP layer processing. The depth information provided by LiDAR is used in autofocus and serves as a basis for subsequent layer rendering and other processing. In the Apple Vision Pro, the LiDAR perceives the 3D spatial structure of the surroundings, allowing real-time updates that feed several functions.
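The ranging principle behind a dToF (direct time-of-flight) sensor can be illustrated with a short sketch: the sensor times a light pulse's round trip and halves the distance light travels in that time. The code below is a minimal illustration only. The function names, the histogram representation, and the bin width are assumptions made for this example, not details of Sony's or Apple's module.

```python
from typing import Sequence

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_distance_m(round_trip_s: float) -> float:
    """Convert a round-trip pulse time to a one-way distance in meters."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def histogram_peak_distance_m(counts: Sequence[int], bin_width_s: float) -> float:
    """Estimate distance from the fullest bin of a photon arrival-time histogram.

    Assumption for this sketch: bin i covers [i * bin_width_s, (i + 1) * bin_width_s),
    and the bin center is taken as the round-trip time.
    """
    peak_bin = max(range(len(counts)), key=counts.__getitem__)
    round_trip_s = (peak_bin + 0.5) * bin_width_s
    return tof_to_distance_m(round_trip_s)


if __name__ == "__main__":
    # A pulse that returns after about 6.67 ns corresponds to roughly 1 m.
    print(tof_to_distance_m(6.67e-9))
    # Hypothetical histogram with 1 ns bins; the peak is in bin 2.
    print(histogram_peak_distance_m([1, 2, 9, 3], 1e-9))
```

Timing resolution sets depth resolution here: a 1 ns bin spans about 15 cm of one-way distance, which is why practical sensors need much finer timing than this toy histogram uses.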

The Apple Vision Pro device incorporates VST (Video See Through) technology, which is important to understand before exploring its related functions. The Apple Vision Pro is an MR (Mixed Reality) device, distinguishing it from VR (Virtual Reality) and AR (Augmented Reality) glasses. MR devices provide an immersive VR experience while allowing users to see the outside world without removing the headset.

MR devices have a physical form similar to that of VR devices. The difference is that an MR device includes a VST function for viewing the external environment. Many VR devices on the market also offer a camera view and safety boundaries, but they typically provide only low-resolution, low-frame-rate video of the environment. When a VR game user moves too far from the initial position and crosses the safety boundary, the game screen disappears and is replaced by the outside world as captured by the camera (or infrared camera), which keeps the player within a safe area.
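The safety-boundary behavior described above can be sketched as a simple distance check. This is an illustration under stated assumptions: the boundary is modeled as a circle around the starting position, and the function name and parameters are hypothetical. Real headsets commonly let the user draw an arbitrary boundary shape.

```python
import math
from typing import Tuple


def should_show_camera_view(
    position_xz: Tuple[float, float],
    origin_xz: Tuple[float, float],
    boundary_radius_m: float,
) -> bool:
    """Return True once the user has left the circular safe area.

    Assumption for this sketch: positions are floor-plane (x, z) coordinates
    in meters, and the safe area is a circle centered on the start position.
    """
    dx = position_xz[0] - origin_xz[0]
    dz = position_xz[1] - origin_xz[1]
    return math.hypot(dx, dz) > boundary_radius_m


if __name__ == "__main__":
    start = (0.0, 0.0)
    print(should_show_camera_view((0.5, 0.5), start, 1.5))  # still inside
    print(should_show_camera_view((1.2, 1.2), start, 1.5))  # outside
```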

Apple Vision Pro uses its front-facing cameras to capture the real external environment and employs VST technology to show the captured image on the left-eye and right-eye displays. A physical knob on top of the device lets users switch between working modes, including whether the external environment is shown. The external environment displayed through VST is a real-time image, and the visionOS system fuses the virtual interface and models with it. It’s important to note that what the user sees through Apple Vision Pro is not the outside world itself but a camera image of it shown on the displays in front of their eyes.

LiDAR supplies the depth information about the external environment that is needed to fuse VST with the virtual interface in the correct perspective relationship and to project virtual shadows accurately.

Apple Vision Pro supports interactive VST and external screen modes. Additionally, Apple demonstrated the automatic combination of VST and immersive mode, gradually displaying people and their surroundings as someone approaches. This mode also displays the user’s eye images on the external screen, allowing for a sense of interaction with the outside world while wearing the head-mounted display device.
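The gradual reveal of an approaching person can be pictured as an opacity ramp driven by distance. The sketch below is only an illustration: the linear ramp, the function name, and the 2 m and 1 m thresholds are assumptions chosen for the example, not values or logic published by Apple.

```python
def person_reveal_opacity(
    person_distance_m: float,
    fade_start_m: float = 2.0,
    fade_end_m: float = 1.0,
) -> float:
    """Opacity (0..1) of the camera view of a nearby person.

    Assumption for this sketch: the person is invisible beyond fade_start_m,
    fully visible within fade_end_m, and blended linearly in between.
    """
    if person_distance_m >= fade_start_m:
        return 0.0
    if person_distance_m <= fade_end_m:
        return 1.0
    return (fade_start_m - person_distance_m) / (fade_start_m - fade_end_m)


if __name__ == "__main__":
    for distance in (3.0, 1.5, 0.5):
        print(distance, person_reveal_opacity(distance))
```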

The Apple Vision Pro features a 3D Camera function that records videos with 3D information, letting users capture and preserve their memories in 3D. As with 3D movies, the two RGB cameras on the front of Apple Vision Pro capture images or record video simultaneously, and the two views are then shown separately to the left and right eyes through the device’s displays. This creates a strong 3D impression without the device having to compute binocular depth.

When viewing the 3D images captured by Apple Vision Pro, the left and right eyes observe them from two different angles, and the brain naturally fuses the images to create an immersive 3D effect. This effect is achieved without relying on LiDAR or binocular depth. However, in order to ensure a proper perspective ratio and anchor the 3D images to the ground and the desktop, the 3D Camera function collaborates with LiDAR. LiDAR helps project the images onto the VST screen, resulting in a lifelike scene restoration.

Furthermore, third-party companies have developed similar 3D applications based on the RGBD fusion data of the dToF LiDAR. For instance, an application called RECORD3D utilizes LiDAR to record depth information, enabling iPhone Pro to synthesize 3D videos by combining the depth point cloud information with the Photogrammetry algorithm. This combination can even generate 3D models, as demonstrated by the Polycam application.
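The core step in turning RGBD data into a colored point cloud is back-projecting each depth pixel through a pinhole camera model. The sketch below illustrates that step only. It is not the method used by any particular app, and the function name and the intrinsics (`fx`, `fy`, `cx`, `cy`) are placeholders assumed for the example.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Color = Tuple[int, int, int]


def depth_to_point_cloud(
    depth_m: Sequence[Sequence[float]],
    rgb: Sequence[Sequence[Color]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Tuple[Point, Color]]:
    """Back-project a depth image into camera-space points with colors.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Assumption for this sketch: depth and color images are already aligned,
    and a depth of 0 or less means "no measurement".
    """
    points = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid depth sample
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append(((x, y, z), rgb[v][u]))
    return points


if __name__ == "__main__":
    depth = [[1.0, 0.0], [2.0, 2.0]]
    colors = [[(255, 0, 0), (0, 0, 0)], [(0, 255, 0), (0, 0, 255)]]
    for point, color in depth_to_point_cloud(depth, colors, 1.0, 1.0, 0.5, 0.5):
        print(point, color)
```

Because the LiDAR depth map is much sparser than the RGB image, a real pipeline would first upsample or fuse the depth with the color frame. That step is omitted here.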

The occlusion function is an important feature of Apple Vision Pro: it ensures that the surrounding environment and the virtual interface are integrated realistically and seamlessly in VST mode. Currently, the user’s hand displayed in VST is cut out of the camera image, which leaves its border slightly blurred and gives it a sense of shaking. To achieve the best spatial perspective relationship, the hand, interface, VST environment, and VST background need to be sorted by depth and kept in a stable relative relationship, with smooth transitions between them.

When using MR glasses, the user’s hand appears closest to the MR device, with the virtual interface displayed in the blue area and the external real environment captured by VST displayed in the gray area. To ensure the correct positional relationship between the layers and the correct appearance of the hand in front of the blue area, LiDAR plays a role in forming the occlusion relationship. This can be done by creating a dense point cloud depth map through RGBD fusion with dToF LiDAR and RGB cameras or by reconstructing the entire 3D space using LiDAR and front-facing cameras.
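One common way to enforce this layer ordering is a per-pixel depth test: the virtual pixel is drawn only where it is closer to the viewer than the real surface measured at that pixel. The sketch below is a minimal illustration of that idea, not Apple's implementation. The function name and the use of `None` for "no virtual content" are assumptions of this example.

```python
from typing import List, Optional, Sequence, TypeVar

Pixel = TypeVar("Pixel")


def composite_with_occlusion(
    passthrough: Sequence[Sequence[Pixel]],
    real_depth_m: Sequence[Sequence[float]],
    virtual: Sequence[Sequence[Optional[Pixel]]],
    virtual_depth_m: Sequence[Sequence[Optional[float]]],
) -> List[List[Pixel]]:
    """Pick, per pixel, whichever of the real or virtual layer is nearer.

    Assumption for this sketch: all four images share one resolution and
    viewpoint, and a virtual depth of None means no virtual content there.
    """
    output = []
    for y, passthrough_row in enumerate(passthrough):
        row = []
        for x, real_pixel in enumerate(passthrough_row):
            v_depth = virtual_depth_m[y][x]
            virtual_in_front = v_depth is not None and v_depth < real_depth_m[y][x]
            row.append(virtual[y][x] if virtual_in_front else real_pixel)
        output.append(row)
    return output


if __name__ == "__main__":
    # One row of three pixels: a hand at 0.4 m, then a wall at 3 m.
    camera = [["hand", "wall", "wall"]]
    camera_depth = [[0.4, 3.0, 3.0]]
    # A virtual panel at 1 m covers the first two pixels only.
    panel = [["ui", "ui", None]]
    panel_depth = [[1.0, 1.0, None]]
    print(composite_with_occlusion(camera, camera_depth, panel, panel_depth))
```

In the example, the hand stays visible in front of the panel, the panel hides the wall behind it, and the wall shows through where there is no virtual content.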

Hand capture and tracking are possible within the infrared camera’s panoramic range, not limited to the structured light camera’s field of view. Gesture tracking is primarily done by the infrared camera, while the establishment of the occlusion relationship is handled by LiDAR. There is a fusion logic between multiple sensors to ensure accurate tracking and occlusion effects.

The good 3D visual experience provided by Apple Vision Pro can be understood from the perspective of mammalian evolution. Panoramic vision is a defensive strategy observed in herbivorous animals, allowing them to detect predators approaching from any direction. Predators such as tigers, wolves, and leopards, on the other hand, have evolved binocular vision, which lets them judge distances and plan attacks using the baseline between their eyes and binocular parallax.
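The link between baseline, parallax, and distance can be made concrete with the standard rectified-stereo relation Z = f · B / d, where f is the focal length in pixels, B is the baseline between the two viewpoints, and d is the disparity in pixels. The sketch below uses illustrative numbers; the function name and the sample values are assumptions, not Vision Pro specifications.

```python
def depth_from_disparity_m(
    focal_length_px: float,
    baseline_m: float,
    disparity_px: float,
) -> float:
    """Depth of a point seen by two parallel cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical values: 1000 px focal length and a 65 mm baseline.
    for disparity in (65.0, 13.0, 6.5):
        print(disparity, depth_from_disparity_m(1000.0, 0.065, disparity))
```

Disparity shrinks in inverse proportion to distance, so binocular depth judgments are precise up close and coarse far away.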

Near-field display devices have a significant impact on our natural 3D vision in real environments. Some head-mounted displays can cause discomfort due to a lack of synchronization and accuracy between the displayed images and our body perception. This can lead to conflicts in the brain’s judgments, resulting in dizziness, fatigue, and other adverse reactions.

One cause of 3D vertigo is the conflict between the brain’s judgments based on vision, vestibular cues, and body perception. When the body moves but the image doesn’t change or vice versa, it creates a mismatch that confuses the brain and leads to discomfort. Technical limitations in refresh rate, rendering, and positioning contribute to this issue, but devices like Apple Vision Pro have made improvements to alleviate these problems.

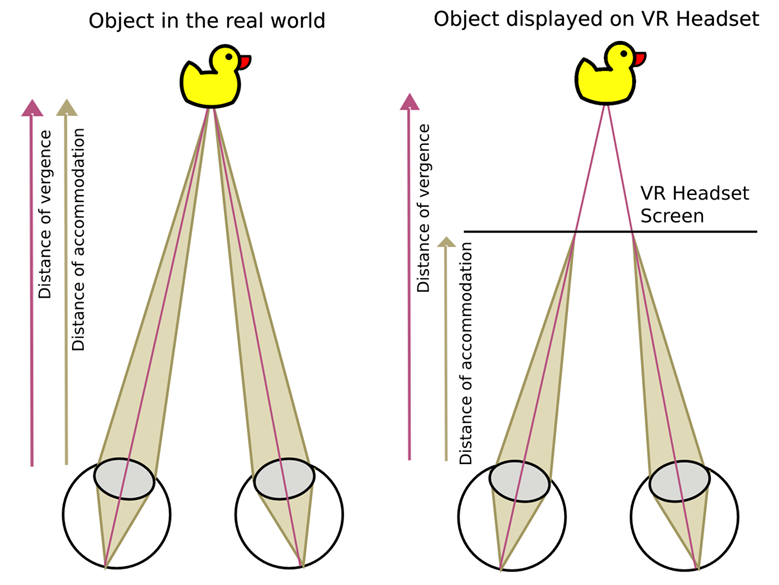

Another factor is the Vergence-Accommodation Conflict (VAC). Our eyes focus differently for objects at varying distances, but headsets keep the focus fixed on a virtual screen. This conflict between visual parallax and focus blur causes depth perception conflicts and visual fatigue.

LiDAR plays a role in reducing the VAC phenomenon in VST (Video See Through). By scanning objects in the field of view and obtaining real-time distance information, LiDAR helps simulate the eye’s refocusing process in the virtual environment. Objects can then be blurred according to how far they lie from the viewer’s point of focus, making the experience more realistic and reducing discomfort.
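Depth-dependent blur of this kind can be approximated with a standard small-angle optics result: the angular size of the blur circle is roughly the pupil diameter multiplied by the defocus in diopters (the difference between the inverse distances of the object and the focus point). The sketch below is an illustration only and not Apple's rendering method. The function name and the 4 mm default pupil diameter are assumptions.

```python
def defocus_blur_angle_rad(
    object_distance_m: float,
    focus_distance_m: float,
    pupil_diameter_m: float = 0.004,
) -> float:
    """Approximate angular blur-circle diameter for an out-of-focus object.

    blur ~= pupil diameter * |1 / object distance - 1 / focus distance|
    Assumption for this sketch: a 4 mm pupil and small angles.
    """
    defocus_diopters = abs(1.0 / object_distance_m - 1.0 / focus_distance_m)
    return pupil_diameter_m * defocus_diopters


if __name__ == "__main__":
    focus_m = 2.0  # e.g. the distance of the object the user is looking at
    for object_m in (0.5, 2.0, 10.0):
        print(object_m, defocus_blur_angle_rad(object_m, focus_m))
```

A renderer could feed per-pixel distances from the depth map into such a function and blur each region accordingly, which leaves the focused object sharp while nearer and farther content softens.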

Overall, understanding the principles of the brain and leveraging technologies like LiDAR can help improve the natural 3D vision experience and minimize discomfort associated with head-mounted display devices.

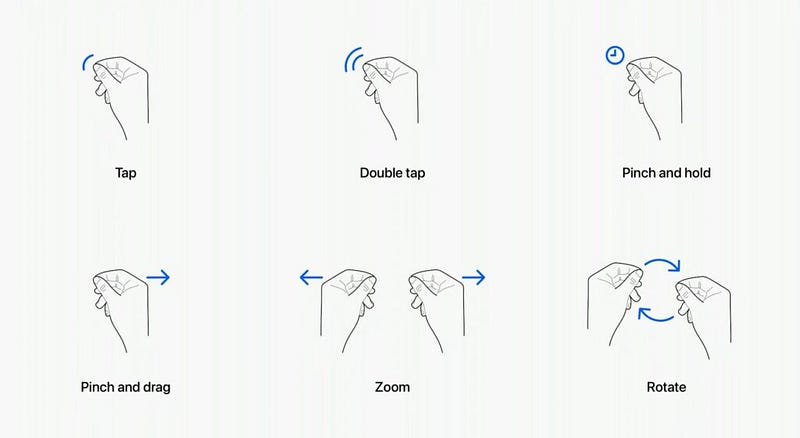

In this groundbreaking computing paradigm, physical touch is no longer necessary. Using Vision Pro’s cameras, intuitive gestures allow for effortless interaction without handheld controllers. This paradigm shift rivals the impact of the first mouse and the first iPhone. With no need for physical input, the constraints of a fixed-sized display are eliminated. Vision Pro can simulate resolutions exceeding 4K up to 100 feet away.

In 2023, the competition in the augmented/mixed reality field has intensified with new and established players launching innovative products and platforms.

Apple is betting early on the transformative potential of augmented/mixed reality, aiming to revolutionize how we interact with the world and enhance productivity and everyday life. The Vision Pro device showcased its versatility for various use cases such as remote work, entertainment, and immersive travel. While gaming is possible on the Vision Pro with Apple Arcade, it’s currently not a primary focus.

Meta’s Quest Pro headset is designed as a professional tool, emphasizing multitasking, creation, and collaboration. Apple envisions the Vision Pro as a constant companion, while the Quest Pro serves as a virtual office environment. Meta’s other headsets, Quest 2 and Quest 3, offer affordable entry-level options for gaming enthusiasts and newcomers to virtual reality. While gaming is their main focus, they also provide options and apps for augmented reality and the metaverse.

Valve Software’s Index is a high-end headset tailored for gaming and remains popular for playing VR games through Valve’s Steam platform. Valve has also demonstrated its commitment to modders and tinkerers with the recent introduction of the portable gaming PC, the Steam Deck.

Sony prioritizes VR gaming with its PSVR2 headset. Although media apps like Netflix can be accessed, gaming remains the primary focus.

Apple Vision Pro is a groundbreaking device that establishes a new standard for AR/VR/MR/XR experiences with its unmatched performance. However, for the realization of the “Metaverse” dream, it is crucial to reduce hardware costs, particularly those associated with perception hardware.

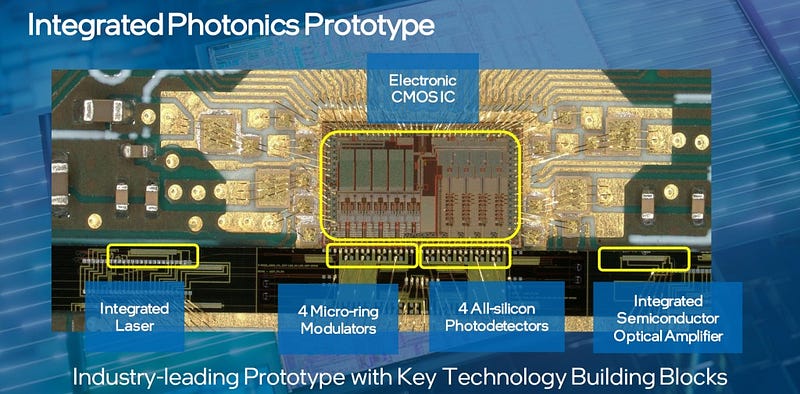

Moore’s Law has demonstrated that semiconductor integration plays a pivotal role in driving down costs. In this regard, silicon-photonics technology holds immense potential for integrating multiple discrete photonics chips, including laser illumination and transceiver chips, into a single monolithic solution. This advancement would contribute significantly to cost reduction and enable further innovation in the field.
